Kernel density estimation

Matthieu Bloch

Kernel Density Estimation

- Density estimation problem: given samples \(\{\bfx_i\}_{i=1}^N\) from an unknown density \(f(\bfx)\), estimate \(f(\bfx)\)

- Applications: classification, clustering, anomaly detection, etc.

Kernel density estimation

- General form of kernel density estimate \[\hat{f}(\bfx) = \frac{1}{N}\sum_{i=1}^N k_{\sigma}(\bfx-\bfx_i)\]

- \(k_{\sigma}\) is called a kernel

- Estimate is non parametric, also known as Parzen window method

- \(\sigma\) is the bandwidth

- Looks like a ridge regression kernel but with equal weights and need not be inner product kernel

- A kernel should satisfy the following

- \(\int k_{\sigma}(\bfx)d\bfx=1\)

- \(k_{\sigma}(\bfx)\geq 0\)

- \(k_{\sigma}(\bfx) = \frac{1}{\sigma^d}D(\frac{\norm{\bfx}}{\sigma})\) for some \(D(\cdot)\)

- Plenty of standard kernels: rectangular, Gaussian, etc.

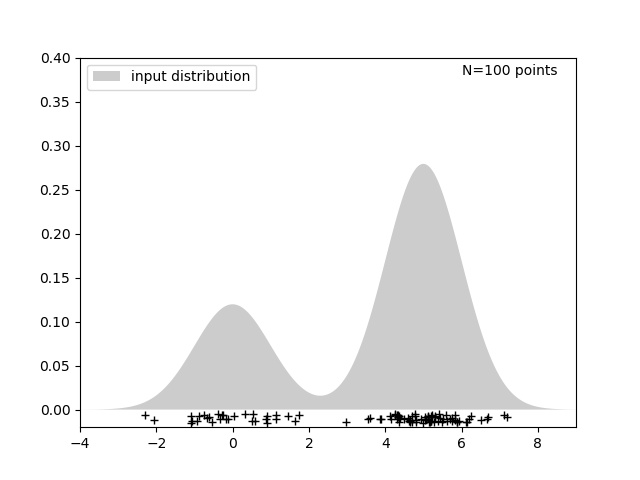

Demo kernel density estimation

How do we choose \(\sigma\)?

Kernel density estimation

- Let \(\hat{f}_\sigma(\bfx)\) be a kernel density estimate based on kernel \(k_\sigma\). Suppose we scale \(\sigma\) with \(N\) as

- \(\lim_{N\to\infty} \sigma_N=0\)

- \(\lim_{N\to\infty} N \sigma_N^d=\infty\) Then \(\lim_{N\to\infty}\E{\int\abs{\hat{f}_\sigma(\bfx)-f(\bfx)}d\bfx}=0\)

Seems like a very powerful result, kernel density estimation always works given enough points

- In practice, choose \(\sigma_N\approx 1.06 \hat{\sigma}N^{-\frac{1}{5}}\) (Silverman’s rule of thumb)

- Can also use model selection techniques (split dataset for testing and training)

Ugly truth: kernel density estimation works well with a lot of points in low dimension