Probably Approximately Correct Learnability

Matthieu R Bloch

A simpler supervised learning problem

Consider a special case of the general supervised learning problem

- Dataset \(\calD\eqdef\{(\bfx_1,y_1),\cdots,(\bfx_N,y_N)\}\)

- \(\{\bfx_i\}_{i=1}^N\) drawn i.i.d. from unknown \(P_{\bfx}\) on \(\calX\)

- \(\{y_i\}_{i=1}^N\) labels with \(\calY=\{0,1\}\) (binary classification)

Unknown \(f:\calX\to\calY\), no noise.

Finite set of hypotheses \(\calH\), \(\card{\calH}=M<\infty\)

- \(\calH\eqdef\{h_i\}_{i=1}^M\)

Binary loss function \(\ell:\calY\times\calY\rightarrow\bbR^+:(y_1,y_2)\mapsto \indic{y_1\neq y_2}\)

- In this very specific case, the true risk simplifies to \[ R(h)\eqdef\E[\bfx y]{\indic{h(\bfx)\neq y}} = \P[\bfx y]{h(\bfx)\neq y} \]

- The empirical risk becomes \[ \widehat{R}_N(h)=\frac{1}{N}\sum_{i=1}^{N} \indic{h(\bfx_i)\neq y_i} \]

Can we learn?

Our objective is to find a hypothesis \(h^*=\argmin_{h\in\calH}\widehat{R}_N(h)\) that ensures a small risk
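The empirical risk and its minimizer over a finite class can be sketched in a few lines of Python. This is a minimal illustration only: the threshold classifiers, the data points, and the helper names (`empirical_risk`, `erm`, `make_threshold`) are assumptions made for the example and do not come from the lecture.

```python
from typing import Callable, List, Sequence

Hypothesis = Callable[[float], int]


def empirical_risk(h: Hypothesis, xs: Sequence[float], ys: Sequence[int]) -> float:
    """Fraction of examples misclassified by h (average binary loss)."""
    return sum(int(h(x) != y) for x, y in zip(xs, ys)) / len(xs)


def erm(hypotheses: Sequence[Hypothesis], xs: Sequence[float], ys: Sequence[int]) -> Hypothesis:
    """Return a hypothesis with the smallest empirical risk (ties: first one)."""
    return min(hypotheses, key=lambda h: empirical_risk(h, xs, ys))


def make_threshold(t: float) -> Hypothesis:
    """Hypothetical hypothesis: predict 1 when x >= t, else 0."""
    return lambda x: int(x >= t)


# Toy finite class with M = 5 hypotheses (illustrative values only).
hypotheses: List[Hypothesis] = [make_threshold(t) for t in (0.0, 0.25, 0.5, 0.75, 1.0)]

# Noiseless labels generated by an assumed target f(x) = 1{x >= 0.5}.
xs = [0.1, 0.2, 0.4, 0.6, 0.8, 0.9]
ys = [int(x >= 0.5) for x in xs]

risks = [empirical_risk(h, xs, ys) for h in hypotheses]
h_star = erm(hypotheses, xs, ys)
```

In this toy setting the target function belongs to the class, so the minimizer reaches zero empirical risk; in general nothing guarantees that.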

For a fixed \(h_j\in\calH\), how does \(\widehat{R}_N(h_j)\) compare to \({R}(h_j)\)?

- Observe that for \(h_j\in\calH\)

- The empirical risk is a normalized sum of i.i.d. random variables \[ \widehat{R}_N(h_j)=\frac{1}{N}\sum_{i=1}^{N} \indic{h_j(\bfx_i)\neq y_i} \]

- \(\E{\widehat{R}_N(h_j)} = R(h_j)\)

\(\P{\abs{\widehat{R}_N(h_j)-{R}(h_j)}>\epsilon}\) is a statement about the deviation of a normalized sum of i.i.d. random variables from its mean

We’re in luck! Such bounds, known as concentration inequalities, are a well-studied subject

Concentration inequalities: basics

Markov's inequality. Let \(X\) be a non-negative real-valued random variable. Then for all \(t>0\) \[\P{X\geq t}\leq \frac{\E{X}}{t}.\]

Chebyshev's inequality. Let \(X\) be a real-valued random variable with finite variance. Then for all \(t>0\) \[\P{\abs{X-\E{X}}\geq t}\leq \frac{\Var{X}}{t^2}.\]

Weak law of large numbers. Let \(\{X_i\}_{i=1}^N\) be i.i.d. real-valued random variables with finite mean \(\mu\) and finite variance \(\sigma^2\). Then for all \(\epsilon>0\) \[\P{\abs{\frac{1}{N}\sum_{i=1}^N X_i-\mu}\geq\epsilon}\leq\frac{\sigma^2}{N\epsilon^2}\qquad\text{and hence}\qquad\lim_{N\to\infty}\P{\abs{\frac{1}{N}\sum_{i=1}^N X_i-\mu}\geq \epsilon}=0.\]

Back to learning

By the weak law of large numbers, we know that \[ \forall\epsilon>0\quad\P[\{(\bfx_i,y_i)\}]{\abs{\widehat{R}_N(h_j)-{R}(h_j)}\geq\epsilon}\leq \frac{\Var{\indic{h_j(\bfx_1)\neq y_1}}}{N\epsilon^2}\leq \frac{1}{N\epsilon^2}\]

Given enough data, we can generalize

How much data? Taking \(N\geq\lceil\frac{1}{\delta\epsilon^2}\rceil\) ensures \(\P{\abs{\widehat{R}_N(h_j)-{R}(h_j)}\geq\epsilon}\leq \delta\).
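To get a feel for how loose the variance-based bound is for a single fixed hypothesis, one can compare it with the exact deviation probability. The sketch below assumes a hypothesis with true risk `p`, so that the number of errors on `n` samples is Binomial(`n`, `p`); the function names and the small tolerance used at the boundary are choices made for this illustration.

```python
import math


def exact_deviation_prob(n: int, p: float, eps: float) -> float:
    """P(|K/n - p| >= eps) for K ~ Binomial(n, p), by direct summation."""
    total = 0.0
    for k in range(n + 1):
        # Small tolerance so that floating-point rounding does not drop
        # outcomes lying exactly on the boundary |k/n - p| = eps.
        if abs(k / n - p) >= eps - 1e-12:
            total += math.comb(n, k) * p**k * (1 - p) ** (n - k)
    return total


def chebyshev_bound(n: int, p: float, eps: float) -> float:
    """Var(indicator) / (n * eps^2), with Var = p * (1 - p)."""
    return p * (1 - p) / (n * eps**2)


def loose_bound(n: int, eps: float) -> float:
    """The cruder bound 1 / (n * eps^2), which ignores the value of p."""
    return 1.0 / (n * eps**2)


n, p, eps = 100, 0.3, 0.1
exact = exact_deviation_prob(n, p, eps)
cheb = chebyshev_bound(n, p, eps)
loose = loose_bound(n, eps)
```

For these assumed values the exact probability lies well below both bounds, which suggests that sharper inequalities are worth looking for.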

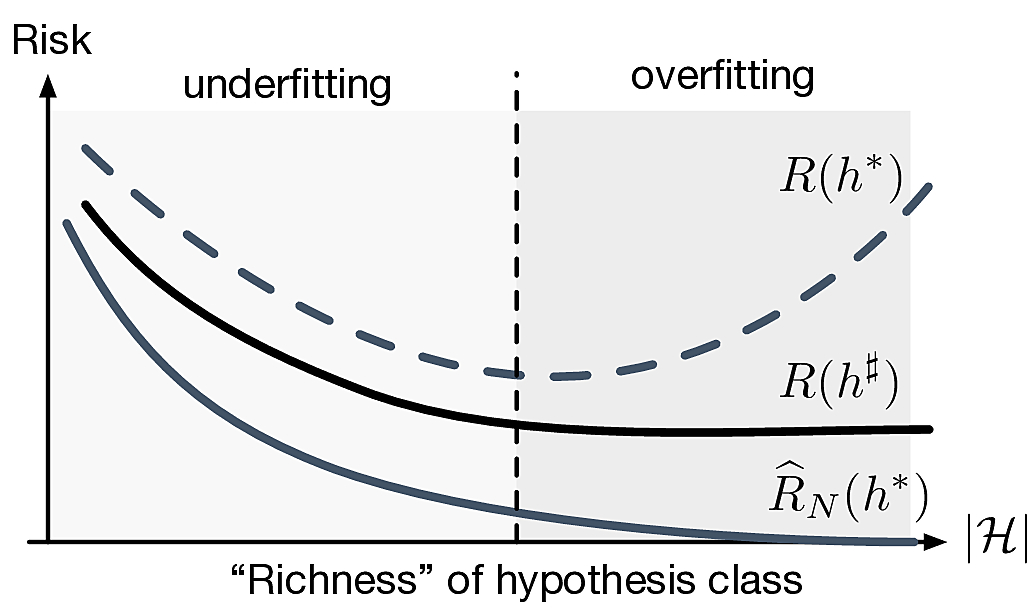

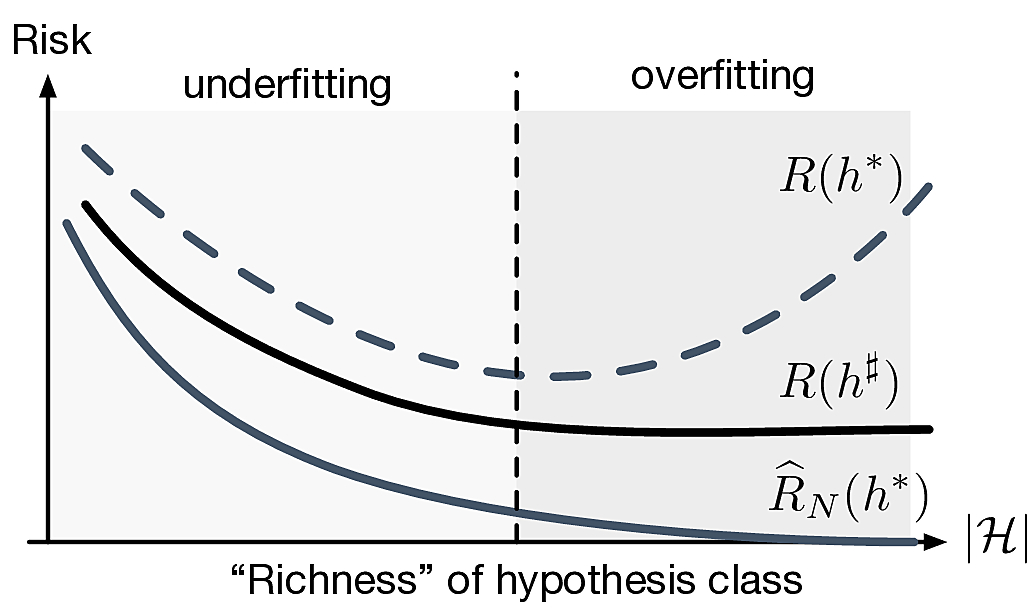

- That’s not quite enough! We care about \(\widehat{R}_N(h^*)\) where \(h^*=\argmin_{h\in\calH}\widehat{R}_N(h)\)

- If \(M=\card{\calH}\) is large we should expect the existence of \(h_k\in\calH\) such that \(\widehat{R}_N(h_k)\ll R(h_k)\)

- Since \(h^*\in\calH\), \[ \P{\abs{\widehat{R}_N(h^*)-{R}(h^*)}\geq\epsilon} \leq \P{\exists j:\abs{\widehat{R}_N(h_j)-{R}(h_j)}\geq\epsilon} \]

- By the union bound over the \(M\) hypotheses, \[ \P{\abs{\widehat{R}_N(h^*)-{R}(h^*)}\geq\epsilon} \leq \frac{M}{N\epsilon^2} \]

- If we choose \(N\geq\lceil\frac{M}{\delta\epsilon^2}\rceil\) we can ensure \(\P{\abs{\widehat{R}_N(h^*)-{R}(h^*)}\geq\epsilon}\leq \delta\).

- That’s a lot of samples!
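The concern that a large class is likely to contain some hypothesis whose empirical risk falls far below its true risk can be illustrated with a small simulation. The sketch below makes a strong simplifying assumption that is not in the lecture: every hypothesis errs on each example independently with probability 0.5, so every true risk equals 0.5. The function name, the seed, and the class sizes are hypothetical.

```python
import random
from typing import Dict, Sequence


def min_empirical_risk_by_class_size(
    n_samples: int, class_sizes: Sequence[int], seed: int = 0
) -> Dict[int, float]:
    """Smallest empirical risk among the first m hypotheses, for each m.

    Assumption: each hypothesis misclassifies each example independently
    with probability 0.5, i.e. its true risk is exactly 0.5.
    """
    rng = random.Random(seed)
    risks = [
        sum(rng.random() < 0.5 for _ in range(n_samples)) / n_samples
        for _ in range(max(class_sizes))
    ]
    # Taking the minimum over nested prefixes makes the result
    # non-increasing in m by construction.
    return {m: min(risks[:m]) for m in class_sizes}


result = min_empirical_risk_by_class_size(n_samples=20, class_sizes=[1, 10, 100, 1000])
for m, r in result.items():
    print(f"M = {m:5d}: smallest empirical risk = {r:.2f} (true risk 0.50)")
```

As the class grows, the best-looking hypothesis on the sample looks better and better even though none of them beats random guessing, which is why a bound for a single fixed hypothesis does not transfer to \(h^*\) without paying for \(M\).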

Concentration inequalities: not so basic

We can obtain much better bounds than with Chebyshev

Hoeffding's inequality. Let \(\{X_i\}_{i=1}^N\) be i.i.d. real-valued zero-mean random variables such that \(X_i\in[a_i;b_i]\) with \(a_i<b_i\). Then for all \(\epsilon>0\) \[\P{\abs{\frac{1}{N}\sum_{i=1}^N X_i}\geq\epsilon}\leq 2\exp\left(-\frac{2N^2\epsilon^2}{\sum_{i=1}^N(b_i-a_i)^2}\right).\]

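- In our learning problem, the variables \(\indic{h_j(\bfx_i)\neq y_i}-R(h_j)\) are i.i.d., zero-mean, and take values in an interval of length 1, so that \[ \forall\epsilon>0\quad\P{\abs{\widehat{R}_N(h_j)-{R}(h_j)}\geq\epsilon}\leq 2\exp(-2N\epsilon^2)\]

- By the union bound over the \(M\) hypotheses, \[ \forall\epsilon>0\quad\P{\abs{\widehat{R}_N(h^*)-{R}(h^*)}\geq\epsilon}\leq 2M\exp(-2N\epsilon^2)\]

- We can now choose \(N\geq \lceil\frac{1}{2\epsilon^2}\left(\ln \frac{2M}{\delta}\right)\rceil\) to ensure \(\P{\abs{\widehat{R}_N(h^*)-{R}(h^*)}\geq\epsilon}\leq \delta\)

- \(M\) can be quite large (almost exponential in \(N\)) and, with enough data, \(h^*\) still generalizes.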
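The gap between the two sample-size requirements is easiest to see numerically. The following sketch evaluates the variance-based requirement \(M/(\delta\epsilon^2)\) and the exponential-bound requirement \(\ln(2M/\delta)/(2\epsilon^2)\) side by side; the function names and the chosen values of \(\epsilon\), \(\delta\), and \(M\) are illustrative assumptions.

```python
import math


def chebyshev_sample_size(epsilon: float, delta: float, num_hypotheses: int) -> int:
    """N such that M / (N * epsilon^2) <= delta (up to float rounding)."""
    return math.ceil(num_hypotheses / (delta * epsilon**2))


def hoeffding_sample_size(epsilon: float, delta: float, num_hypotheses: int) -> int:
    """N such that 2 * M * exp(-2 * N * epsilon^2) <= delta (up to float rounding)."""
    return math.ceil(math.log(2 * num_hypotheses / delta) / (2 * epsilon**2))


epsilon, delta = 0.1, 0.05
for m in (10, 1_000, 1_000_000):
    n_cheb = chebyshev_sample_size(epsilon, delta, m)
    n_hoef = hoeffding_sample_size(epsilon, delta, m)
    print(f"M = {m:>9,d}: variance-based N = {n_cheb:>13,d}, exponential-bound N = {n_hoef:,d}")
```

The first requirement grows linearly in \(M\) while the second grows only logarithmically, which is what allows the class to be very large relative to the sample size.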

How about learning \(h^{\sharp}\eqdef\argmin_{h\in\calH}R(h)\)?

Learning can work!

If \(\forall h_j\in\calH\,\abs{\widehat{R}_N(h_j)-{R}(h_j)}\leq\epsilon\) then \(\abs{R(h^*)-{R}(h^\sharp)}\leq 2\epsilon\).
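The argument behind this claim is short and can be written as a single chain of inequalities, using only the definitions of \(h^*\) and \(h^\sharp\) given earlier: \[ R(h^*) \leq \widehat{R}_N(h^*)+\epsilon \leq \widehat{R}_N(h^\sharp)+\epsilon \leq R(h^\sharp)+2\epsilon \] The first and third inequalities apply the assumed uniform deviation bound to \(h^*\) and \(h^\sharp\) respectively, and the second holds because \(h^*\) minimizes the empirical risk over \(\calH\). Since \(h^\sharp\) minimizes the true risk, we also have \(R(h^\sharp)\leq R(h^*)\), hence \(0\leq R(h^*)-R(h^\sharp)\leq 2\epsilon\).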

- How do we make \(R(h^\sharp)\) small?

- Need bigger hypothesis class \(\calH\)! (could we take \(M\to\infty\)?)

- Fundamental trade-off of learning

Probably Approximately Correct Learnability

- A hypothesis set \(\calH\) is (agnostic) PAC learnable if there exists a function \(N_\calH:]0;1[^2\to\bbN\) and a learning algorithm such that:

- for every \(\epsilon,\delta\in]0;1[\),

- for every \(P_\bfx\), \(P_{y|\bfx}\),

- when running the algorithm on at least \(N_\calH(\epsilon,\delta)\) i.i.d. examples, the algorithm returns a hypothesis \(h\in\calH\) such that \[\P[\bfx y]{\abs{{R}(h)-R(h^\sharp)}\leq\epsilon}\geq 1-\delta\]

The function \(N_{\calH}(\epsilon,\delta)\) is called the sample complexity

We have effectively already proved the following result

A finite hypothesis set \(\calH\) is PAC learnable with the Empirical Risk Minimization algorithm and with sample complexity \[N_\calH(\epsilon,\delta)={\lceil{\frac{2\ln(2\card{\calH}/\delta)}{\epsilon^2}}\rceil}\]
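One way to see where the factor 2 in the numerator comes from: if all deviations are at most \(\epsilon/2\), then \(R(h^*)\) is within \(\epsilon\) of \(R(h^\sharp)\), and requiring \(2\card{\calH}\exp(-2N(\epsilon/2)^2)\leq\delta\) gives \(N\geq 2\ln(2\card{\calH}/\delta)/\epsilon^2\). The Python sketch below simply evaluates this formula; the function name and the example values are assumptions made for illustration.

```python
import math


def pac_sample_complexity(epsilon: float, delta: float, num_hypotheses: int) -> int:
    """ceil(2 * ln(2 * |H| / delta) / epsilon^2) for a finite class with ERM."""
    return math.ceil(2 * math.log(2 * num_hypotheses / delta) / epsilon**2)


# Illustrative values: |H| = 1000, accuracy 0.1, confidence 0.95.
n_required = pac_sample_complexity(epsilon=0.1, delta=0.05, num_hypotheses=1000)
print(n_required)
```

Compared with the requirement for controlling the deviation of \(h^*\) alone at level \(\epsilon\), this is four times larger, which is the price of halving the tolerance.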

What is a good hypothesis set?

Ideally we want \(\card{\calH}\) small so that \(R(h^*)\approx R(h^\sharp)\), and we want to get lucky so that \(R(h^*)\approx 0\)

- In general this is not possible

- Remember, we usually have to learn \(P_{y|\bfx}\), not a function \(f\)

- Questions

- What is the optimal binary classification hypothesis class?

- How small can \(R(h^*)\) be?