Linear Representations

Dr. Matthieu R Bloch

Wednesday, August 25, 2021

Logistics

- Enrollment update: 177 in person + 29 online (2nd biggest course in ECE!)

- Might still be a few seats left to grab!

- Sign up for piazza! (124 enrolled @ 10am) Piazza

- Lecture resources

- My slides + your notes + my notes

- Prof. Romberg’s notes: https://jrom.ece.gatech.edu/mfml-f20-notes/

- Recommended textbook: Marc Peter Deisenroth et al., Mathematics for Machine Learning, Cambridge University Press, (2019)

- Self assessment posted!

- Due Monday August 30, 2021 (+48 hour grace period if forfeiting bonus)

- Open everything, collaboration widely encouraged, ask questions on Piazza

- Upload on Gradescope

- Graduate teaching assistants + office hours

- Information to be posted on Canvas

Today’s class

Objective 1: ease into vector spaces of functions

- Why it matters to ML

- Why you might have seen it already in some form

- Why we’ll need linear algebra!

Objective 2: write a few proofs together

- How to write clean proofs

Representation and approximation in ML

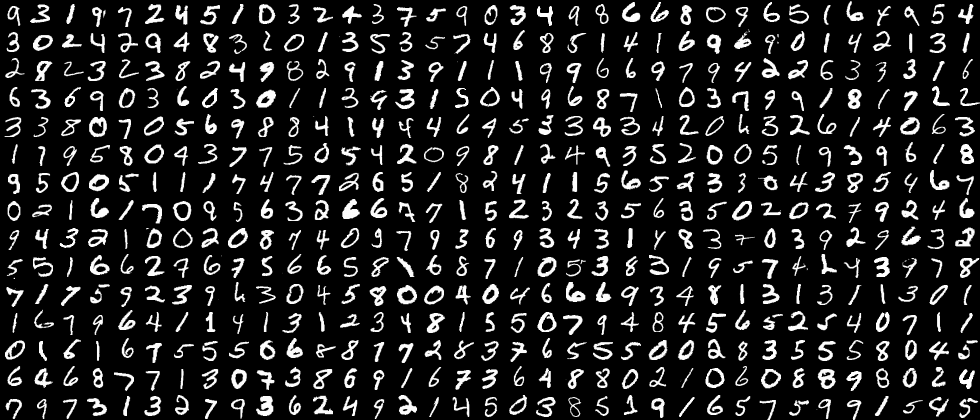

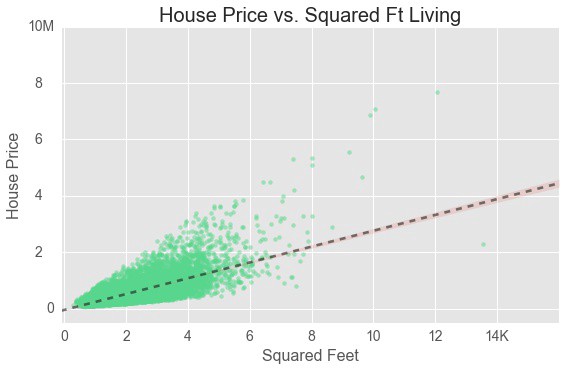

- Regression and classification in machine learning

- Many problems reduce to fitting a function \(f:\bbR^d\to\bbR\) to a data \(\set{(\bfx_i,y_i)}_{i=1}^n\)

- \(f\) is the unknown quantity, and we want \(f(\bfx_i)\approx y_i\) so that we can predict \(f(\bfx)\) for every \(\bfx\in\bbR^d\)

- Classification: predict a label - Regression: predict a real number

Fundamental question: how do we represent a function in a computer?

- A computer only stores a sequence of (quantized) real values

- What does it mean to “store”a function, say \(f(x)=\sin x\)

Solution: use a basis expansion \(f(\bfx)\approx\sum_{i=1}^m\alpha_i h_i(\bfx)\)

- \(\set{h_i}_{i=1}^m\) are called basis functions (how do we choose them?)

- The approximation is called a linear basis expansion

- Representing \(f\) amounts to finding \(\set{\alpha_i}_{i=1}^M\) - we’ll try to formalize this later

Example: Polynomials

There is a natural “basis” for representing polynomials of degree \(i\) \(p(x) = \sum_{i=0}^m \alpha_i x^i\)

- The monomials \(\set{x^i}_{i=1}^m\) can be combined to describe any polynomial of degree less than or equal to \(m\)

- The degree \(m\) controls the “richness” or “complexity” the functions we can describe

Question: which functions can be described as such?

Analytic functions on open set \(\calD\): \(f(x)=\sum_{i=0}^\infty \alpha_i (x-x_0)^i\) for every \(x\in\calD\).

\[ e^x = \sum_{i=0}^\infty \frac{1}{i!}x^i\quad \log(1+x)=\sum_{i=0}^\infty\frac{(-1)^{i+1}}{k}x^k\quad \sin x = \sum_{i=0}^\infty \frac{(-1)^i}{(2i+1)!}x^{2i+1} \]Taylor series

Let \(k\geq 1\) and let \(f:\bbR\to\bbR\) be in \(k\) times differentiable at a point \(a\in\bbR\). There exists \(h_k:\bbR\to\bbR\) such that \(\lim_{x\to a}h_k(x)=0\) and \[ f(x) = \sum_{i=0}^k \frac{f^{(i)}(a)}{i!}(x-a)^i + h_k(x)(x-a)^k. \]

Example: Lagrange Polynomials

Question: How do we find a basis expansion given \(\set{(x_i,y_i)}_{i=1}^n\)?

Lagrange polynomials \[ p(x) = \sum_{j=1}^{n} \alpha_j L_j(x)\qquad L_j(x)\eqdef \prod_{1\leq i\leq n, i\neq j}\frac{x-x_i}{x_j-x_i} \]

Given \(n\geq 1\) distinct points \(\set{(x_i,y_i)}_{i=1}^n\), there exists a unique degree \(n-1\) interpolating polynomial.

Example: Polynomial splines

- B-splines: the splines can be viewed as linear basis expansion on a basis called B-splines

- What are the B-splines for \(\ell=0\)?

- What are the B-splines for \(\ell=1\)?

- What are the B-splines for \(\ell=2\)?

- Moving forward, we will need linear algebra to describe this precisely and systematically

- How do we compute a linear basis expansion?

- What is a good basis for machine learning?

- How do we measure the quality of approximation?