Mathematical Foundations of Machine Learning

Prof. Matthieu Bloch

Monday, December 2, 2024

Last time

- Last class: Monday November 25, 2024

- We talked about Maximum likelihood estimation

- Today: We will talk about principal component

analysis

- Great way to end the course where we started

- To be effectively prepared for today's class, you should have:

Final exam is coming

- Review all notes and exams: exam is comprehensive

- Friday December 6, 2024 2:40pm-5:30pm (see https://registrar.gatech.edu/info/final-exam-matrix-fall-2024)

- In class and open notes

- Same rules as for the midterm with electronics

- Frequently asked questions

- How many problems on the midterm?

- How many questions on the midterm?

- What topics will the midterm cover?

- Do you have sample exams?

- Can we do additional work for extra credit?

- Do you give bonuses for participation or for CIOS completion?

- We will be very available for help and review

sessions

- Tuesday December 03, 2024 12pm: Anuvab (hybrid)

- Wednesday December 04, 2024 9am: Dr. Bloch (online)

- Wednesday December 04, 2024 11:30am: Jack (online)

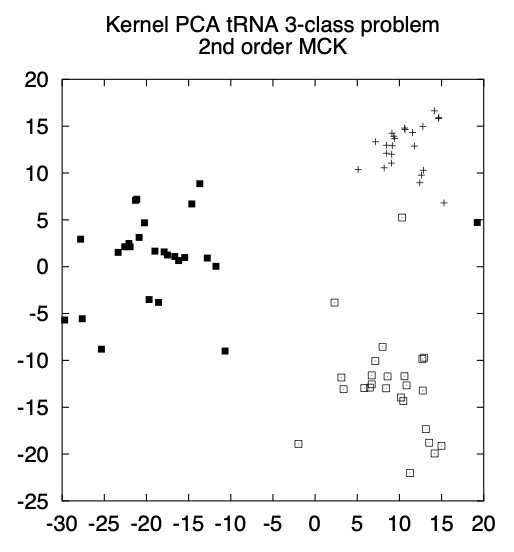

Back to first lecture: Kernel PCA

- tRNA (transfer RNA): plays a key role in the

creation of amino acid sequence of proteins (source)

- G G G G A A T T A G C T C A A G C G G T A G A G C G …

- Challenge: compare, classify, analyze, visualize

sequences

- Datasets of tRNA sequences \(\set{\bfx_i}_{i=1}^n\)

- Lots happening behind the scene

- What does it mean to represent the data in 2D?

- How do we measure distances between tRNA sequences?

- We can explain a lot now!

Principal Component Analysis

- Feature extraction methods based: unsupervised, linear, based on sum of square errors

- Idea is to find approximation of data as \[ \bfx_i \approx \bfmu + \bfA\bftheta_i\textsf{ with }\bfmu\in\bbR^d,\bfA\in\bbR^{d\times k},\bftheta_i\in\bbR^{k} \] and \(\bfA\) has orthonormal columns

Principal Component Analysis consists in solving the problem \[\argmin_{\bfmu,\bfA,\bftheta_i}\sum_{i=1}^N\norm[2]{\bfx_i-\bfmu-\bfA\bftheta_i}^2\]

- Hard part is finding \(\bfA\)

- Given \(\bfA\), relatively easy to find \(\bftheta_i\) and \(\bfmu\)

Solving PCA

Assume that \(\bfmu\) and \(\bfA\) are fixed. Then, \[\bftheta_i=\bfA^{\intercal}(\bfx_i-\bfmu)\]

Assume \(\bfA\) is fixed and \(\bftheta_i = \bfA^\intercal(\bfx_i-\bfmu)\). Then, \[\bfmu=\frac{1}{N}\sum_{i=1}^N\bfx_i\]

Solving PCA

One possible choice of \(\bfA\) is \[\bfA=[\bfu_1,\cdots,\bfu_k]\] where \(\bfu_i\)'s are the eigenvectors corresponding to the \(k\) largest eigenvalues of \(\bfS\eqdef\sum_{i=1}^N\bfx_i\bfx_i^\intercal\)

- Proof steps

- Step 1: introduce \(\bfS\eqdef\sum_{i=1}^N\bfx_i\bfx_i^\intercal=\bfX\bfX^\intercal\)

- Step 2: introduce linear program

- Step 3: solve linear program

- Connection to SVD \(\bfX = \bfU\Sigma \bfV^\dagger\) where columns of \(\bfX\) are \(\bfx_i-\bfmu\): \[ \bfX = \underbrace{\mat{ccc}{\vert & & \\ \bfA & & \\ \vert & &}}_{\bfU}\underbrace{\mat{c}{-\Theta-\\ \\ }}_{\Sigma\bfV^\dagger} \]