Mathematical Foundations of Machine Learning

Prof. Matthieu Bloch

Wednesday August 28, 2024 (v1.3)

Last time

- Last class: Monday August 26, 2024

- We talked about Hilbert spaces, inner product, completeness

- To be effectively prepared for today's class, you should

have:

- Gone over the previous slides and read associated lecture notes

- Made sure you have access to Canvas, Piazza, Gradescope

- Spent a bit of time on Homework 1

- due date: Tuesday September 3, 2024 (updated to account for Labor day)

- It's a fast turnover but you'll have more time for future homework

- Work on Problem 2 last

Infinite dimensions

In infinite dimensions, things are a little bit tricky. What does the following mean? \[ x(t) = \sum_{n=1}^\infty \alpha_n\psi_n(t) \]

We need to define a notion of convergence, e.g., \[ \lim_{N\to\infty}\norm{x(t)-\sum_{n=1}^N \alpha_n\psi_n(t)}=0 \]

Problems can still arise if "points are missing"

We avoid this by introducing the notion of completeness

Hilbert space

A sequence \(\set{x_n}_{n\geq 0}\) in \(\calV\) is Cauchy if \(\forall\epsilon>0\) \(\exists N\in\bbN^*\) such that \(\forall n,m>N\) \(\norm{x_n-x_m}\leq \epsilon\)

A Hilbert space \(\calH\) is a complete inner product space, i.e., an inner product space in which every Cauchy sequence in \(\calH\) converges to a point in \(\calH\)

- Intuition: a Hilbert space has no "missing point"

- A finite dimensional inner product space is complete (many Hilbert spaces are infinite dimensional!)

- We won't worry too much about proving that spaces are complete

- A complete normed vector space is a Banach space; a lot of things are still possible without an inner product

Orthogonality principle

- Let \(\calH\) be a Hilbert space with inner product \(\dotp{\cdot}{\cdot}\) and induced norm \(\norm{\cdot}\) ; let \(\calT\) be a subspace of \(\calH\)

- For \(x\in\calH\), what is the closest point \(\hat{x}\in\calT\)? How do we solve \(\min_{y\in\calT}\norm{x-y}\)?

- This problem has a unique solution given by the orthogonality principle

Let \(\calX\) be a pre-Hilbert space, \(\calT\) be a subspace of \(\calX\), and \(x\in\calX\).

If there exists a vector \(m^*\in\calT\) such that \(\forall m\in\calT\) \(\norm{x-m^*}\leq \norm{x-m}\), then \(m^*\) is unique.

\(m^*\in\calT\) is a unique minimizer if and only if the error \(x-m^*\) is orthogonal to \(\calT\).

- This doesn't say that \(m^*\) exists!

Let \(\calH\) be a Hilbert space, \(\calT\) be a closed subspace of \(\calX\), and \(x\in\calX\).

There exists a unique vector \(m^*\in\calT\) such that \(\forall m\in\calT\) \(\norm{x-m^*}\leq \norm{x-m}\).

Computing approximations

- The orthogonality principle gives us a procedure for computing the closest point

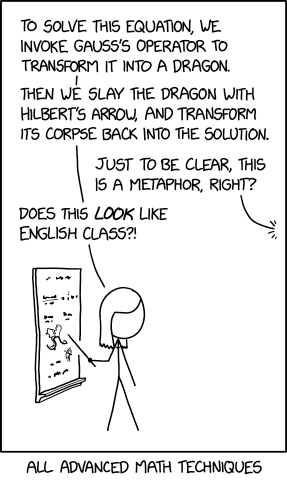

Let \(\calH\) be a Hilbert space, \(\calT\) be a subspace of \(\calH\) with dimension \(n\), and \(x\in\calH\). Let \(\set{e_i}_{i=1}^n\) be a basis for \(\calT\). Then the projection \(\hat{x}\) of \(x\) onto \(\calT\) is \[ \hat{x} = \sum_{i=1}^n\alpha_i e_i \] where \(\bfalpha\eqdef\mat{c}{\alpha_1&\cdots&\alpha_n}^\intercal\) is the solution of \(\bfG\bfalpha=\bfb\) with \(\bfG\) the Grammiam matrix of the basis and \(\bfb\) the coordinates of \(x\) on the basis.

Next time

- Next class: Wednesday September 4, 2024

- We will talk about orthobases

- We will prove a fundamental result called Parseval's theorem

- To be effectively prepared for next class, you

should:

- Go over today's slides and read associated lecture notes

- Work on Homework 1 (due date: Tuesday September 03, 2024)

- Optional

- Export slides for next lecture as PDF (be on the lookout for an announcement when they're ready)